Browser automation has come a long way. In its earliest days, it meant writing brittle, hard-coded scripts that replayed keystrokes or mouse clicks. These scripts saved time, but they broke the moment an interface changed, lacked any sense of context, and offered nothing resembling human-like interaction. Today, the possibilities are expansive. Modern browser automation ranges from simple JavaScript snippets that click a button to autonomous agents that can analyze web pages, recover from errors, and even call APIs for assistance. Agents represent the most significant leap forward yet, turning once-fragile scripts into far more resilient, self-correcting systems.

In the following article, we trace how browser automation evolved, showing how each generation of tooling solved common browser tasks and opened up a new set of possibilities.

Rise of Scripting Languages

As web applications grew more complex, simple scripts could no longer keep up with increasingly sophisticated software testing needs. To make sure their applications worked as expected, developers turned to programming languages like Python, which quickly became a foundation of modern web automation.

Python's minimal syntax and extensive library ecosystem (e.g. Requests, BeautifulSoup) made it well suited to quick automation. Selenium's Python bindings let developers write straightforward automations like the button click below.

from selenium import webdriver
from selenium.webdriver.common.by import By

driver = webdriver.Firefox()
driver.get("https://example.com")
driver.find_element(By.ID, "submit").click()  # hard-coded selector: breaks if the id changes
driver.quit()  # close the browser and end the WebDriver session

But one problem kept surfacing across all these early web automations: whenever the UI changed, scripts went out of date. A single selector mismatch was enough to stop the automation from running. The approach was promising, yet too brittle for any website that was updated frequently.
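To see why a single renamed attribute is enough to break such a script, here is a minimal stand-in that uses only Python's standard `html.parser` instead of a real browser. The markup and the `find_by_id` helper are hypothetical; a real Selenium lookup would raise `NoSuchElementException` rather than return an empty list, but the cause is the same.

```python
from html.parser import HTMLParser


class IdFinder(HTMLParser):
    """Collects the tag name of every element whose id equals target_id."""

    def __init__(self, target_id):
        super().__init__()
        self.target_id = target_id
        self.matches = []

    def handle_starttag(self, tag, attrs):
        # attrs arrives as a list of (name, value) pairs
        if dict(attrs).get("id") == self.target_id:
            self.matches.append(tag)


def find_by_id(html, element_id):
    """Return the tag names of all elements carrying the given id."""
    finder = IdFinder(element_id)
    finder.feed(html)
    return finder.matches


# Hypothetical markup before and after a redesign renames the button's id.
OLD_PAGE = '<form><button id="submit">Send</button></form>'
NEW_PAGE = '<form><button id="submit-btn">Send</button></form>'

print(find_by_id(OLD_PAGE, "submit"))  # ['button']
print(find_by_id(NEW_PAGE, "submit"))  # []  (the script's selector no longer matches)
```

Nothing about the button's purpose or label changed, yet the lookup fails, which is exactly the kind of breakage described above.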

Browser automation moves to the native language of the web: JavaScript

Because JavaScript already executes in the browser, it became the natural glue for browser-side tweaks:

// Greasemonkey userscript: auto-select dark mode
// (throws a TypeError if no element with id "darkMode" exists on the page)
document.querySelector('#darkMode').click();

JavaScript made scripts that interact with page elements faster and easier to write.

This trend produced a new wave of JavaScript-based testing frameworks. Node.js frameworks such as Nightwatch and WebdriverIO became go-to tools for testing web apps the way users actually experience them.

But the tests were still deterministic: any unexpected interaction or layout change made them fail.

GUI Automation and Desktop Bots

As automation matured, business workflow automation shifted its focus from scripts to graphical interfaces, and GUI-based tools made browser automation far more widespread. With a GUI-based macro builder, users could press "Record", click around, and then replay the macro.

- AutoHotkey (Windows) gave power users a terse scripting language for hotkeys, window management, and text expansion.

- Keyboard Maestro (Mac) offered drag-and-drop actions, triggers, and conditional logic, with no code required.

- iMacros and later UI.Vision brought the same idea into the browser, letting anyone save form-filling or data-extraction sequences.

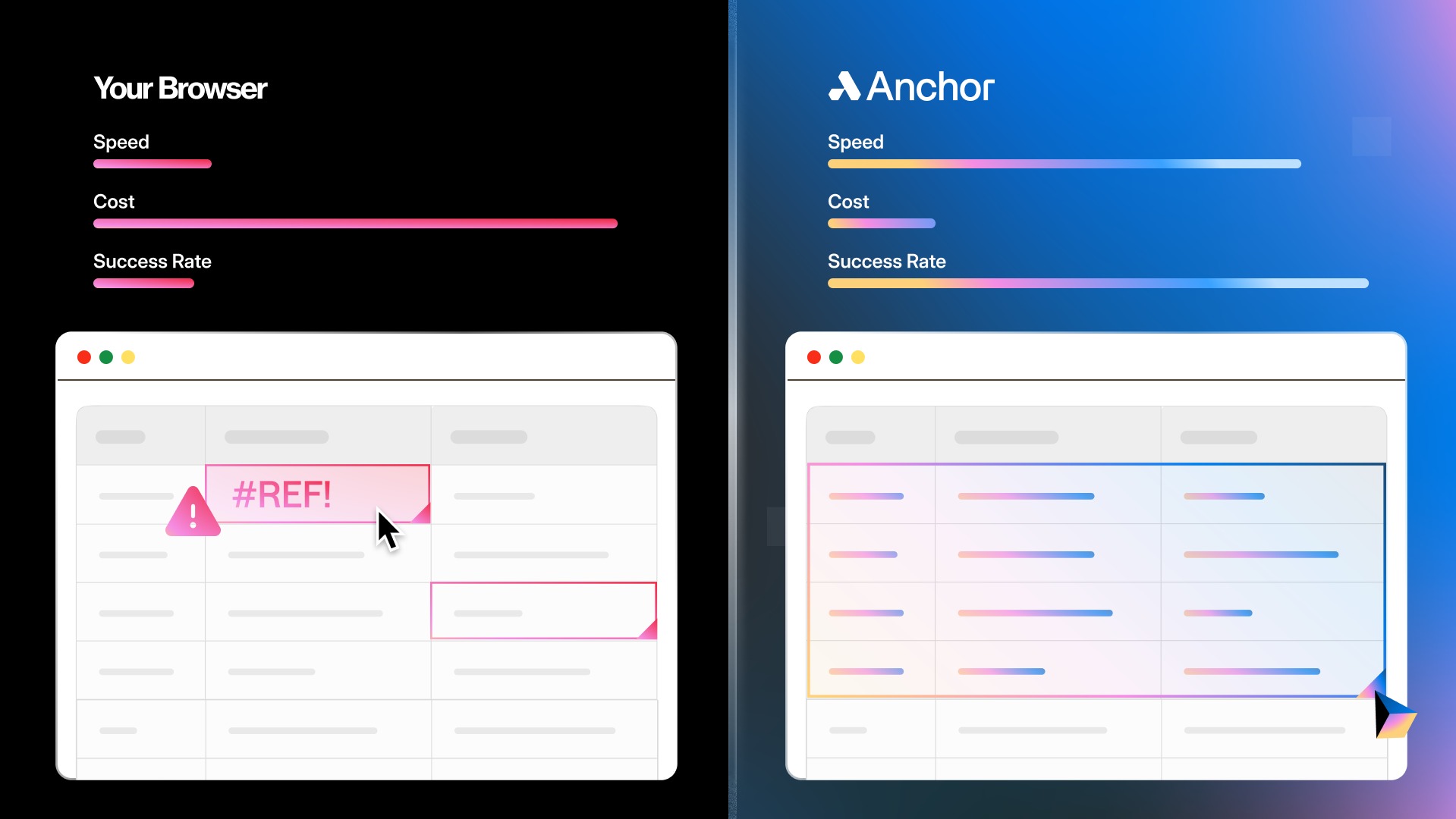

Fragility persists

GUI-based platforms expanded automation beyond developers, but they shared a persistent limitation: fragility.

These tools depended on pixel positions, window titles, or static XPath selectors. A shift of a few pixels, an OS theme change, or a redesigned login screen could break an entire macro. Error handling was minimal: either the click happened or the script stopped. As development tooling let teams ship changes more frequently (and therefore break scripts more often), automation tools had to evolve as well.
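To make that fragility concrete, here is a minimal Python sketch of record-and-replay by absolute screen coordinates. The layouts, element names, and coordinates are invented for illustration, and a bounding-box lookup stands in for the operating system dispatching a real click.

```python
# A recorded macro: absolute screen coordinates of each click, captured while
# the user pressed "Record" (hypothetical values).
RECORDED_CLICKS = [(120, 340), (480, 520)]

# Hypothetical layouts: element name -> (left, top, width, height) in pixels.
ORIGINAL_LAYOUT = {"username": (100, 330, 200, 30), "login": (450, 500, 80, 40)}
# After a redesign, the login button sits 60 px lower.
REDESIGNED_LAYOUT = {"username": (100, 330, 200, 30), "login": (450, 560, 80, 40)}


def element_at(layout, x, y):
    """Return the element whose bounding box contains (x, y), or None."""
    for name, (left, top, width, height) in layout.items():
        if left <= x < left + width and top <= y < top + height:
            return name
    return None


def replay(clicks, layout):
    """Replay each recorded click; stop at the first one that hits nothing."""
    hit = []
    for x, y in clicks:
        target = element_at(layout, x, y)
        if target is None:
            # Like early macro tools: no recovery, the run simply stops.
            raise RuntimeError(f"click at ({x}, {y}) hit no element")
        hit.append(target)
    return hit


print(replay(RECORDED_CLICKS, ORIGINAL_LAYOUT))  # ['username', 'login']
try:
    replay(RECORDED_CLICKS, REDESIGNED_LAYOUT)
except RuntimeError as err:
    print("macro failed:", err)  # macro failed: click at (480, 520) hit no element
```

The macro stores where the user clicked rather than what they clicked, so any layout change silently invalidates the recording.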

Web Automation and Headless Browsers

The next leap in automation came with headless browsers. Frameworks like Puppeteer (originally for Chrome/Chromium) and Playwright (cross-browser: Chromium, Firefox, and WebKit) gave developers fine-grained programmatic control over the browser. Instead of simply clicking buttons or recording macros, automation scripts could:

- Query and manipulate the DOM (Document Object Model)

- Intercept and modify network requests

- Capture console logs and performance metrics

- Handle multi-page navigation, sessions, and cookies with precision

Unlike GUI bots that simulated clicks and keystrokes, headless browser tools talked to the browser's internal APIs directly, enabling faster, more sophisticated, and more reliable automation.
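The "intercept and modify network requests" capability boils down to a per-request decision: let the request through, block it, or answer it with a canned response. Puppeteer (`page.setRequestInterception`) and Playwright (`page.route`) expose this through their own APIs; the sketch below only models the decision logic in plain Python so it runs without a browser. The rule table, URLs, and `Decision` type are hypothetical.

```python
from dataclasses import dataclass
from fnmatch import fnmatchcase
from typing import Optional


@dataclass
class Decision:
    action: str                 # "continue", "abort", or "fulfill"
    body: Optional[str] = None  # mocked response body when action == "fulfill"


# Hypothetical rules, checked in order: (glob pattern, decision).
RULES = [
    ("*.png", Decision("abort")),                 # skip images to save bandwidth
    ("*/analytics/*", Decision("abort")),         # block tracking calls
    ("*/api/user", Decision("fulfill", '{"name": "test-user"}')),  # mock an API
]


def route(url: str) -> Decision:
    """Return the first matching rule's decision; unmatched requests continue."""
    for pattern, decision in RULES:
        if fnmatchcase(url, pattern):
            return decision
    return Decision("continue")


for url in ["https://example.com/", "https://example.com/logo.png",
            "https://example.com/api/user"]:
    print(url, "->", route(url).action)
```

Keeping the rules as data rather than scattered `if` statements makes it easy to reuse the same mocks across many tests.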

Headless browser tools unlocked four major gains for developers:

- Speed – No visible window to draw, so tests typically finish faster.

- Parallelism – Containers or serverless functions can spin up dozens of instances.

- Observability – The Chrome DevTools Protocol exposes network, console, performance, and security data.

- CI/CD integration – Reproducible, deterministic, and easy to package into a Docker image.
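Under the hood, Puppeteer, and Playwright when driving Chromium, talk to the browser over the Chrome DevTools Protocol (CDP): JSON messages, typically exchanged over a WebSocket, where each command carries an `id`, a `method` of the form `Domain.command`, and optional `params`. The sketch below only builds such messages and does not open a connection. `Network.enable`, `Page.navigate`, and `Runtime.evaluate` are real CDP commands; the `CdpSession` class is a hypothetical helper.

```python
import itertools
import json


class CdpSession:
    """Hypothetical helper that serializes Chrome DevTools Protocol commands.

    It only builds the JSON text; a real client would send each message to the
    browser and match the reply to its request by the "id" field.
    """

    def __init__(self):
        self._ids = itertools.count(1)  # increasing ids let replies be matched to requests

    def command(self, method, **params):
        message = {"id": next(self._ids), "method": method}
        if params:
            message["params"] = params
        return json.dumps(message)


session = CdpSession()
print(session.command("Network.enable"))
# {"id": 1, "method": "Network.enable"}
print(session.command("Page.navigate", url="https://example.com"))
# {"id": 2, "method": "Page.navigate", "params": {"url": "https://example.com"}}
print(session.command("Runtime.evaluate", expression="document.title"))
# {"id": 3, "method": "Runtime.evaluate", "params": {"expression": "document.title"}}
```

This is why headless tooling is so observable: enabling a domain such as `Network` makes the browser stream events about every request back over the same channel.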

Examples

Puppeteer

const puppeteer = require("puppeteer");

(async () => {
  const browser = await puppeteer.launch({ headless: true });
  const page = await browser.newPage();
  await page.goto("https://google.com");
  console.log("Page Title:", await page.title());
  await browser.close();
})();

Playwright

from playwright.sync_api import sync_playwright

with sync_playwright() as p:
    browser = p.chromium.launch(headless=True)
    page = browser.new_page()
    page.goto("https://example.com")
    print(page.title())
    browser.close()

Despite the gains in speed and browser control, these were still deterministic scripts. If a selector changed, the script failed, and someone had to update it by hand to get it working again.

Note: the difference between headless and headful browsers has narrowed over time, and most browser automation platforms now support both modes. For more information, see Choosing Headful over Headless Browsers.

Browser Agents and Intelligent Automation

Brittle scripts, a long-standing pain for developers, led to the emergence of the browser agent. Developers wanted not only speed and full control over the browser but also something that could interact with a web page the way a reasoning human would: a tool able to cope with trivial changes to a page's code. Browser agents stepped in to fill that gap.

What is a browser agent?

A browser agent couples a browser instance with an AI reasoning core (usually an LLM) so it can pursue a goal rather than follow a rigid recipe.
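What separates an agent from a script is the loop: observe the page, let a reasoning step choose the next action toward the goal, act, and repeat until done. The sketch below is a minimal, hypothetical version of that loop. `choose_action` stands in for the LLM call with a simple rule (match on role and visible text instead of a hard-coded id), and the page is a plain list of dicts rather than a real browser, so every name here is an assumption.

```python
from typing import Optional

# Hypothetical page snapshot. The button's id was renamed in a redesign
# (a script looking for id="submit" would fail), but its visible text was not.
PAGE = [
    {"id": "email-input", "role": "textbox", "text": "Email"},
    {"id": "submit-btn", "role": "button", "text": "Send"},
]


def choose_action(goal: str, page: list, history: list) -> Optional[dict]:
    """Stand-in for an LLM: return the next action toward the goal, or None when done.

    A real agent would send the goal, page snapshot, and history to a model and
    parse its reply; this rule-based version ignores the goal text and just
    matches elements by role and visible text.
    """
    if "type_email" not in history:
        for el in page:
            if el["role"] == "textbox" and "email" in el["text"].lower():
                return {"name": "type_email", "target": el["id"]}
    if "click_send" not in history:
        for el in page:
            if el["role"] == "button" and el["text"].lower() in ("send", "submit"):
                return {"name": "click_send", "target": el["id"]}
    return None


def run_agent(goal: str, page: list, max_steps: int = 5) -> list:
    """Observe -> reason -> act loop with a step budget so it always terminates."""
    history = []
    for _ in range(max_steps):
        action = choose_action(goal, page, history)
        if action is None:  # the reasoning step judged the goal complete
            break
        # A real agent would perform the action in the browser here and then
        # re-observe the page; this sketch only records what it targeted.
        history.append(action["name"])
        print(f"{action['name']} -> #{action['target']}")
    return history


steps = run_agent("Submit the contact form with my email", PAGE)
# type_email -> #email-input
# click_send -> #submit-btn
```

Because the decision is made from what the page shows rather than from a fixed selector, the renamed id does not stop the run; in a real agent, the quality of that decision depends entirely on the model behind `choose_action`.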